美国农业部 /农业研究服务局南方平原农业研究中心,大学城,得克萨斯州 77845,美国

摘要: 数十年来,遥感技术一直被用作精准农业的重要数据采集工具。根据距离地面的高度,遥感平台主要包括卫星、有人驾驶飞机、无人驾驶飞机系统和地面车辆。这些遥感平台上搭载的绝大多数传感器是成像传感器,也可以安装激光雷达等其他传感器。近年来,卫星成像传感器的发展极大地缩小了基于飞机的成像传感器在空间、光谱和时间分辨率方面的差距。最近几年,作为低成本遥感平台的无人机系统的出现极大地填补了有人驾驶飞机与地面平台之间的间距。有人飞机具有飞行高度灵活、飞行速度快、载荷量大、飞行时间长、飞行限制少以及耐候性强等优势,因此在未来仍将是主要的精准农业遥感平台。本文的第1部分概述了遥感传感器的类型和三个主要的遥感平台(即卫星、有人驾驶飞机和无人驾驶飞机系统)。接下来的两个部分重点介绍用于精准农业的有人机载成像系统,包括由安装在农用飞机上的消费级相机组成的系统,并详细描述了部分定制和商用机载成像系统,包括多光谱相机、高光谱相机和热成像相机。第4部分提供了五个应用实例,说明如何将不同类型的遥感图像用于精准农业应用中的作物生长评估和作物病虫害管理。最后简要讨论了将不同遥感平台和成像系统用于精准农业上的一些挑战和未来的努力方向。

关键词: 机载成像系统;载人飞机;多光谱图像;高光谱图像;远红外图像;精准农业

Airborne remote sensing systems for precision agriculture applications

Chenghai Yang

U.S. Department of Agriculture, Agricultural Research Service, Southern Plains Agricultural Research Center, College Station, Texas 77845, USA

Abstract: Remote sensing has been used as an important data acquisition tool for precision agriculture for decades. Based on their height above the earth, remote sensing platforms mainly include satellites, manned aircraft, unmanned aircraft systems (UAS) and ground-based vehicles. A vast majority of sensors carried on these platforms are imaging sensors, though other sensors such as lidars can be mounted. In recent years, advances in satellite imaging sensors have greatly narrowed the gaps in spatial, spectral and temporal resolutions with aircraft-based sensors. More recently, the availability of UAS as a low-cost remote sensing platform has significantly filled the gap between manned aircraft and ground-based platforms. Nevertheless, manned aircraft remain a major remote sensing platform and offer some advantages over satellites or UAS. Compared with UAS, manned aircraft have flexible flight height, fast speed, large payload capacity, long flight time, few flight restrictions and great weather tolerance. The first section of the article provided an overview of the types of remote sensors and the three major remote sensing platforms (i.e., satellites, manned aircraft and UAS). The next two sections focused on manned aircraft-based airborne imaging systems that have been used for precision agriculture, including those consisting of consumer-grade cameras mounted on agricultural aircraft. Numerous custom-made and commercial airborne imaging systems were reviewed, including multispectral, hyperspectral and thermal cameras. Five application examples were provided in the fourth section to illustrate how different types of remote sensing imagery have been used for crop growth assessment and crop pest management for practical precision agriculture applications. Finally, some challenges and future efforts on the use of different platforms and imaging systems for precision agriculture were briefly discussed.

Key words: airborne imaging system; manned aircraft; multispectral imagery; hyperspectral imagery; thermal imagery; precision agriculture

CLC number: S-1    Document code: A    Article ID: 201909-SA004

Citation: Yang Chenghai. Airborne remote sensing systems for precision agriculture applications[J]. Smart Agriculture, 2020, 2(1): 1-22. (in English with Chinese abstract)

引用格式:杨成海. 机载遥感系统在精准农业中的应用[J]. 智慧农业(中英文), 2020, 2(1): 1-22.

1 Major types of remote sensing systems and platforms

Over the last two decades, numerous commercial and custom-built airborne and high-resolution satellite imaging systems have been developed and deployed for diverse remote sensing applications, including precision agriculture. More recently, unmanned aircraft systems (UAS) have emerged as a low-cost and versatile remote sensing platform. Although this article focuses on manned aircraft-based airborne imaging systems for precision agriculture, other major types of remote sensing systems and platforms are briefly reviewed in this section.

1.1 Remote sensor types

Remote sensing sensors, or simply remote sensors, are designed to detect and measure reflected and emitted electromagnetic radiation from a distance in the field of view of the sensor instrument. Remote sensors can be classified into non-imaging (e.g., spectroradiometers) and imaging (e.g., cameras). Imaging sensors are traditionally carried on earth-orbiting satellites and manned aircraft, while both non-imaging and imaging sensors can be handheld or mounted on ground-based vehicles. More recently, UAS have become a popular remote sensing platform due to their low cost, ease of deployment and low flight height to fill the gap between manned and ground-based platforms.

Imaging sensors (film-based photographic camera, electro-optical sensor, passive microwave, radar) can provide views of a target area from vertical or nadir perspectives. Depending on the source of energy, imaging sensors can be classified into passive and active sensors. Passive sensors detect reflected sunlight or emitted thermal infrared and microwave energy, while active sensors, represented by imaging radar, provide their own energy source to a target and record the reflected radiation from the target. Electro-optical sensors are the main passive sensors being used in remote sensing. These passive sensors use detectors to convert the reflected and/or emitted radiation from a ground scene to electrical signals, which are then stored on magnetic, optical and/or solid-state media. Detectors have been designed with sensitivities for spectral intervals of interest in remote sensing, including regions of the near ultraviolet (UV), visible to near-infrared (VNIR), shortwave infrared (SWIR), mid-wave infrared (MWIR), and thermal infrared or longwave infrared (LWIR). The total span of the wavelengths that can be detected by electro-optical detectors extends from 0.3 to 15µm[1]. The sensors operating in the visible to SWIR region can only be used under sunlit conditions. In contrast, thermal sensors have the capability to work with or without sunlight. A vast majority of remote sensors used for precision agriculture are electro-optical sensors with VNIR sensitivity, though thermal sensors are also commonly used.

Based on the number of spectral bands and band widths, imaging sensors can be classified into two categories: multispectral and hyperspectral. Multispectral imaging sensors typically measure reflected and/or emitted energy in 3 to 12 spectral bands, though most multispectral imaging systems measure reflectance in three visible bands (blue, green and red) and one near-infrared (NIR) band. In contrast, hyperspectral imaging sensors measure radiation in tens to hundreds of narrow spectral bands across the electromagnetic spectrum. Imagery from hyperspectral sensors contains much more spectral information than imagery from multispectral sensors, thus having the potential for more accurate differentiation and quantification of ground objects and features.

1.2 Remote sensing satellites

Traditional remote sensing satellites such as Landsat and SPOT series have long been used for crop identification[2], yield estimation[3,4] and pest detection over large geographic areas for agricultural applications[2]. However, this type of imagery has limited use for assessing within-field crop growth conditions for precision agriculture because of its coarse spatial resolution and long revisit time.

Recent advances in high-resolution satellite sensors have significantly narrowed the gap in spatial resolution between traditional satellite imagery and airborne imagery. Since the first high resolution commercial remote sensing satellite IKONOS was successfully launched into orbit in 1999, dozens of such satellite imaging systems with spatial resolutions of 10m or finer have been launched[3]. The short revisit time and fast data delivery combined with their large ground coverage make high-resolution satellite imagery attractive for many applications, especially for precision agriculture that requires high-resolution image data.

Most of the satellite sensors offer submeter panchromatic imagery and multispectral imagery in four spectral bands (blue, green, red and NIR) with spatial resolutions of 1 to 4m, including IKONOS, QuickBird, KOMPSAT-2 & -3, GeoEye-1, Pléiades-1A & -1B, SkySat, Gaofen-2, TripleSat, Cartosat-2C & -2D, GaoJing-1, and WorldView-4[4]. A few satellite sensors offer more spectral bands. For example, WorldView-2 provides eight bands in the visible to NIR region at 1.24m, while WorldView-3 offers a total of 28 bands, including the same eight spectral bands at 1.24m, eight SWIR bands (1195-2365nm) at 3.7m and 12 spectral bands (405-2245nm) at 30m. Other sensors offer multispectral imagery with 5 to 10m spatial resolutions, such as RapidEye (6.5m), SPOT 6 & 7 (6m), and Sentinel-2A & -2B (10m)[4]. Some of the earlier satellites such as IKONOS and QuickBird were deactivated or retired, but archived images from these satellites are still available.

Satellite remote sensing is rapidly advancing with many countries and commercial firms launching new satellite sensors. Planet Labs, Inc. (San Francisco, CA, USA) currently operates three different Earth-imaging constellations, PlanetScope, RapidEye and SkySat. PlanetScope consists of over 120 satellites to provide either RGB (red, green, blue) frame images or split-frame images with half RGB and half NIR at 3m spatial resolution. RapidEye has five satellites collecting multispectral imagery in the blue, green, red, red edge and NIR bands with 6.5m nominal resolution (resampled to 5m). SkySat with a constellation of 14 satellites captures 1m multispectral imagery (blue, green, red and NIR) and submeter panchromatic imagery. The complete PlanetScope constellation of approximately 130 satellites is able to image the entire land surface of Earth every day[4]. Yang[5] provided a review of over two dozen high-resolution satellite sensors and their applications for precision agriculture.

1.3 Manned aircraft-based imaging systems

Airborne imaging systems are traditionally referred to as imaging systems carried on manned aircraft, so imagery obtained from manned aircraft is commonly known as airborne or aerial imagery. Cameras or imaging systems used on manned aircraft are generally not restricted by their size and weight and they need to be operated or triggered by the pilot or a camera operator on the aircraft. A typical multispectral or hyperspectral imaging system consists of one or more cameras, a computer and a monitor for data acquisition and real-time visualization.

Depending on the types of remote sensors being used, some airplanes are specially outfitted with camera holes or ports in the underside of the airplane for mounting different imaging sensors. However, cameras are often simply attached to the landing gear or hung out of a window or door using simple mounts. There are large numbers of business and private aircraft that can be used for remote sensing. Most aircraft for remote sensing fly below altitudes of about 3000m above mean sea level (MSL) where no oxygen mask or pressurization is needed. Included in this class are the common fixed-wing, propeller-driven aircraft such as Cessna 206, 182 or 172. This class of aircraft is inexpensive to fly and is widely used throughout the world.

Agricultural aircraft are one particular group of manned aircraft used in many countries. There are thousands of agricultural aircraft used to apply crop production and protection materials in the U.S. alone. These aircraft can be equipped with imaging systems to monitor crop growing conditions, detect crop pests (weeds, diseases and insect damage) and assess the performance and efficacy of ground and aerial application treatments. This additional imaging capability will also increase the usefulness of manned aircraft and help aerial applicators generate additional revenue with remote sensing services.

Light sport aircraft (LSA) are a fairly new category of small, lightweight aircraft that are simple to fly. Different countries have their own specifications and regulations for LSA. For example, in the U.S., several distinct groups of aircraft may be flown as LSA. The U.S. Federal Aviation Administration (FAA) defines an LSA as an aircraft, other than a helicopter or powered-lift, that meets certain requirements, including a maximum gross takeoff weight of 600kg, a maximum stall speed of 83km/h, and a maximum speed in level flight of 220km/h among others[6]. More detailed information on LSA and their licensing and certification can be found at the FAA website[7]. LSA have great potential as a flexible and economical platform for aerial imaging. They are less expensive than other manned aircraft, but do not have many of the restrictions that UAS have.

Helicopters can be used for low-altitude imaging when the ability to hover and fly at low altitude is required. Since helicopters are more expensive to operate, UAS are a much more economical alternative. There are also high-flying manned aircraft that have been used for airborne remote sensing. This class of aircraft can fly at much higher altitudes (e.g., 10,000m MSL) to provide large areal coverage with low spatial resolutions. As high-resolution satellite imagery with large coverage is widely available nowadays, high-flying aircraft may only be used for special tasks.

1.4 UAS-based imaging systems

UAS are a class of aircraft that has been gaining popularity in the last few years. These small and portable aircraft are inexpensive and are able to take off and land where manned aircraft cannot. However, UAS-based cameras and imaging systems have size and weight limitations, and more importantly, they can only be triggered remotely or automatically. Many cameras or sensors used on UAS are the same as, or similar to, those used on manned aircraft, though they are more compact and triggered differently.

Rotary and fixed-wing UAS are the two major types of UAS used today. A rotary UAS can take off and land vertically and hover in place, but its flight time is short and its speed is slow. In contrast, a fixed-wing UAS needs a launcher (unless it can be hand-launched) to take off and a runway to land, but it can fly longer and faster. Nevertheless, all small UAS have limitations on payload, flight time, flight range and wind tolerance. Most small UAS can only carry a small payload that restricts the type of cameras to be used, even though some UAS have the capability to carry multiple imaging systems. Small UAS are powered by batteries and typically have a flight time of 10-30 minutes. The flight range of a UAS also limits the radius that can be covered during each flight.

Nevertheless, UAS can capture images at very high spatial resolution, making it possible to assess crop growth conditions at leaf and plant levels for phenotyping and precision agriculture. In the last few years, UAS-based remote sensing has been increasingly used for crop phenotyping to estimate plant growth parameters such as seedling density[8], plant height[9,10], leaf area index[11], biomass[12,13] and yield[14-16]. Like imagery from manned aircraft and satellites, UAS-based imagery has become an important data source to assess crop health and pest conditions such as water stress[17], weed infestation[18,19], disease infection[20,21], nutrient status[22], and insect damage[23].

Despite their widespread use and fast-growing popularity, UAS have many flight restrictions due to their safety threat to the air space. Each country has its own rules and regulations pertaining to UAS operations. The safety concerns of commercial pilots, especially aerial applicators and other pilots operating in low-level airspace, need to be addressed. In the U.S., UAS pilots must follow the Part 107 guidelines set up by the U.S. FAA[24]. Some of the important operation restrictions include: 1) The unmanned aircraft with all payload must weigh less than 25kg; 2) The flight must be conducted within visual line of sight only; 3) Maximum allowable altitude is 122m above ground level (AGL); 4) Maximum ground speed is 100mph (161km/h); and 5) Operations in Class B, C, D and E airspace are allowed with the required air traffic control permission. The person operating a small UAS must either hold a remote pilot airman certificate or be under the direct supervision of a certified person. There are many other restrictions, though some of the restrictions can be waived if a certificate of waiver is granted.
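The numeric limits listed above lend themselves to a simple pre-flight check. The following is a minimal sketch in Python that encodes only the limits quoted in this section; the `FlightPlan` container, the function name and the example values are hypothetical, and the check covers neither the airspace permission requirement nor the many other Part 107 provisions.

```python
from dataclasses import dataclass

# Limits as quoted in the text above (not a complete statement of the rule).
MAX_TOTAL_WEIGHT_KG = 25.0    # aircraft plus payload must weigh LESS than this
MAX_ALTITUDE_AGL_M = 122.0    # maximum allowable altitude above ground level
MAX_GROUND_SPEED_KMH = 161.0  # maximum ground speed (100 mph)


@dataclass
class FlightPlan:
    """Hypothetical description of a planned small-UAS flight."""
    total_weight_kg: float
    altitude_agl_m: float
    ground_speed_kmh: float
    visual_line_of_sight: bool


def listed_limit_violations(plan):
    """Return the names of the listed limits that the plan would exceed."""
    problems = []
    if plan.total_weight_kg >= MAX_TOTAL_WEIGHT_KG:
        problems.append("weight")
    if plan.altitude_agl_m > MAX_ALTITUDE_AGL_M:
        problems.append("altitude")
    if plan.ground_speed_kmh > MAX_GROUND_SPEED_KMH:
        problems.append("speed")
    if not plan.visual_line_of_sight:
        problems.append("line of sight")
    return problems


# A light multirotor flown at 100 m AGL within sight passes all four checks.
ok_plan = FlightPlan(3.5, 100.0, 50.0, True)
# A 25 kg aircraft at 150 m AGL beyond visual range fails three of them.
bad_plan = FlightPlan(25.0, 150.0, 50.0, False)
```

An empty list means none of the four listed limits is exceeded; it does not by itself establish that a flight is permitted.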

Due to the current aviation regulations and their own limitations, UAS have been mainly used for image acquisition over research plots and relatively small crop areas. Until some of the UAS restrictions are changed for commercial applications, conventional manned aircraft remain an effective and versatile platform for airborne remote sensing. Although high-resolution satellite sensors continue to narrow the gaps in spatial, spectral and temporal resolutions, airborne imaging systems still offer advantages over satellite imagery at a farm level due to their relatively low cost, high spatial resolution, easy deployment and real-time or near-real-time availability of imagery. Moreover, satellite imagery cannot always be acquired for a desired target area at a specified time due to satellite orbits, weather conditions and competition with other customers.

Imagery from manned aircraft is commonly known as airborne or aerial imagery. To distinguish it from imagery from manned aircraft, imagery from UAS is referred to as UAS imagery. Therefore, airborne imagery and airborne imaging systems mentioned in this article refer to manned aircraft. In the following sections, numerous airborne multispectral and hyperspectral imaging systems are reviewed and described. Application examples are provided to illustrate how airborne multispectral and hyperspectral imagery have been used for precision agriculture applications. Some limitations and future efforts on the selection and use of different types of remote sensing imagery for precision agriculture are discussed.

2 Airborne multispectral imaging systems

Airborne multispectral imaging systems have been widely used for precision agriculture since the 1990s due to their low cost, fine resolution, timeliness, and ability to obtain data in spectral bands similar to those of traditional satellite sensors[25,26]. Multispectral imaging systems typically employ multiple cameras and spectral filters to achieve multispectral bands. Most systems use three or four identical cameras with VNIR sensitivity to acquire RGB and color-infrared (CIR) imagery. For example, a four-band imaging system consists of four identical cameras equipped with four different bandpass filters to obtain blue, green, red and NIR bands. The cameras used are typically industrial cameras, each dedicated to one band. However, consumer-grade cameras have been increasingly used as a remote sensing tool in recent years as their quality has improved and their prices have fallen. The main advantage of consumer-grade cameras is that one single camera can be directly used to capture RGB imagery. Moreover, it can be modified to capture one NIR band or a combination of two visible bands and one NIR band. In this section, airborne multispectral imaging systems based on both industrial cameras and consumer-grade cameras are discussed.

2.1 Industrial cameras

Since the 1990s, numerous airborne multispectral imaging systems (AMIS) have been developed and used for a variety of remote sensing applications, including rangeland and cropland assessment, precision agriculture, and pest management[27-30]. Most airborne multispectral imaging systems can provide image data with submeter resolution at 3-12 narrow spectral bands in the visible to NIR region of the spectrum[31-34].

Industrial cameras typically employ monochrome charge coupled device (CCD) sensors, though complementary metal oxide semiconductor (CMOS) sensors have been displacing CCD sensors in recent years because of their low cost and power consumption. Each camera in a multispectral system is usually equipped with a different bandpass filter. This approach has the advantage that each camera can be individually adjusted for optimum settings, but the individual band images need to be registered or aligned with each other. Another approach is to use a beam splitting prism and multiple sensors built in one single camera to achieve multispectral imaging. One such system is the CS-MS1920 multispectral camera (Teledyne Optech, Inc., Vaughan, Ontario, Canada), which uses a beam splitting prism and three CCD sensors to acquire images in 3-5 spectral bands in the VNIR range. The advantage of this approach is that the individual band images are optically and mechanically aligned.

The USDA-ARS Aerial Application Technology Research Unit in College Station, Texas has developed and evaluated several airborne imaging systems for agricultural applications. Fig. 1 shows a four-camera multispectral imaging system (lower left) along with three other imaging systems which are described in other sections (the four imaging systems can be installed over the two camera ports on the USDA-ARS Cessna 206 to capture images simultaneously). The four-camera system consists of four monochrome CCD cameras and a computer equipped with a frame grabber and image acquisition software[34]. The cameras are sensitive in the 400-1000nm spectral range and provide 12-bit images with 2048×2048 pixels. The four cameras are equipped with four bandpass interference filters with center wavelengths of 450nm, 550nm, 650nm, and 830nm, respectively. The band width of all the filters is 40nm.
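The passband of each filter in the four-camera system follows directly from its center wavelength and band width. The short Python sketch below works this out, assuming the stated 40nm band width is the full width centered on the nominal wavelength (the text does not say how the width is defined); the names `passband_nm` and `FILTER_CENTERS_NM` are introduced here for illustration only.

```python
SENSOR_RANGE_NM = (400, 1000)  # spectral sensitivity of the CCD cameras
BAND_WIDTH_NM = 40             # assumed to be the full width of each filter
FILTER_CENTERS_NM = {"blue": 450, "green": 550, "red": 650, "NIR": 830}


def passband_nm(center_nm, width_nm=BAND_WIDTH_NM):
    """Return the (lower, upper) wavelength limits of a bandpass filter."""
    half = width_nm / 2
    return (center_nm - half, center_nm + half)


# Lower and upper limits for each of the four bands.
passbands = {name: passband_nm(c) for name, c in FILTER_CENTERS_NM.items()}

for name, (low, high) in passbands.items():
    print(f"{name}: {low:.0f}-{high:.0f}nm")
```

Under this assumption the four bands span 430-470nm, 530-570nm, 630-670nm and 810-850nm, all within the 400-1000nm sensitivity range of the cameras.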

Fig. 1 A four-camera imaging system (lower left) mounted together with a hyperspectral camera (upper left), a thermal camera (upper right), and two consumer-grade RGB and NIR cameras (lower right) owned by the USDA-ARS at College Station, Texas.

Airborne Data Systems, Inc. (Redwood Falls, Minnesota, USA) offers several airborne multispectral imaging systems. The Spectra-View system is a multispectral system designed to accommodate up to eight different cameras varying in size, format, and wavelength from UV to LWIR. A global positioning system/inertial navigation system (GPS/INS) unit is fully integrated with the system to provide precise, automated geo-registration of the images. For example, the Spectra-View 5WT system can acquire 12-bit images in six bands: blue (480-510nm), green (520-610nm), red (620-730nm), NIR (770-970nm), MWIR (3-5µm) and LWIR (8-14µm). The four VNIR images have 4872×3248 pixels, while the MWIR and LWIR images contain 640×512 pixels. The Spectra-View 9W captures 12-bit images with 9000×4872 pixels in the blue, green, red and NIR bands and in a panchromatic band (400-1000nm). The low-cost Agri-View system is designed to provide the same green, red and NIR bands as in Spectra-View 5WT. All the systems integrate proprietary preflight, mission planning, and image processing software[35].

Tetracam, Inc. (Chatsworth, California, USA) offers multispectral imaging systems in two product families. Each system in the agricultural digital camera (ADC) family consists of a single camera equipped with fixed filters at green, red and NIR wavelengths. The ADC, ADC Lite and ADC Micro systems offer 8- or 10-bit images with 2048×1536 pixels, while the ADC Snap captures images with 1280×1024 pixels. Systems in the multiple camera array (MCA) family contain four, six or twelve registered and synchronized cameras to simultaneously capture user-specified band images in VNIR wavelengths. The MCA systems are available in the standard Micro-MCA and Micro-MCA Snap versions.

Based on the Micro-MCA4 architecture, Tetracam’s RGB+3 system consists of one RGB camera and three monochrome cameras that sense three narrow bands centered at 680nm, 700nm, and 800nm. Tetracam’s Micro-MCA systems can also be configured with one or two FLIR TAU thermal sensors (FLIR Systems, Inc., Nashua, New Hampshire, USA) for imaging in the 7.5-13μm spectral range. The latest variant of the MCA series, the Macaw (MCAW: Multiple Camera Array Wireless), contains six 1280×1024 global snap shutter sensors with individual filtering. All of Tetracam’s cameras are lightweight and compact and permit manual, auto-timed or remotely triggered exposures. All store images on memory cards (except the Macaw) and can continuously capture GPS coordinates for geotagging images. Tetracam’s PixelWrench2 software is used for managing and editing images.

In addition to its CS-MS1920 multispectral camera mentioned earlier, Teledyne Optech offers several medium-frame RGB cameras and thermal cameras either standalone or integrated with a lidar (light detection and ranging) system. For example, the RGB CS-10000 camera and the thermal CS-LW640 camera integrated with the Orion M300 lidar allow data acquisition for color and thermal imagery and 3D surface data. With 10,320 by 7,760 pixels, the CS-10000 delivers imagery for detailed feature visualization. The CS-LW640 is based on an uncooled microbolometer sensor with a resolution of 640×480 pixels. Integrated with the Orion C lidar sensor platform, it is a powerful tool for mapping thermal features in 3D[36].

FLIR’s thermal cameras come in many series with sensor arrays up to 1280×1024 pixels in typical spectral ranges of 1-5μm, 3-5μm and 7.5-13μm. The FLIR SC660 camera owned by USDA-ARS in College Station (Fig. 1) is sensitive in the 7.5 to 13μm range and measures temperatures from –40°C to 1500°C. It captures 14-bit thermal images with 640×480 pixels. FLIR T600-series cameras with uncooled microbolometer detectors feature 14-bit images with 640×480 pixels in 7.5-13μm. The SC8000-series cameras feature cooled indium antimonide (InSb) detectors and high spatial resolution (1024×1024 or 1344×784 pixels) in 1.5-5μm or 3-5μm[37].

ITRES Research Ltd. (Calgary, Alberta, Canada) offers the TABI-1800, a broadband thermal imager whose Stirling-cycle-cooled MCT (mercury cadmium telluride) detector gives it excellent sensitivity. This allows users to distinguish temperature differences as low as one tenth of a degree while reducing thermal drift compared with bolometer-based systems. It captures 14-bit broadband images with a swath of 1800 pixels, the industry’s widest array, in the 3.7-4.8µm spectral range[38].

Phase One Industrial (Copenhagen, Denmark) provides high-end aerial imaging products. The Phase One 150MP 4-Band aerial system consists of two synchronized 150-megapixel (150MP) iXM-RS150F cameras (RGB and achromatic) mounted side by side on a plate, a Somag CSM40 modular stabilizer, an Applanix GPS/inertial measurement unit (IMU), an iX Controller computer and the iX Capture software. Each camera has a pixel array of 14204×10652 pixels. The software performs pixel alignment between the RGB and NIR images, and the following image products can be generated in TIFF format: RGB, NIR, 4-band composite (RGBN), and 3-band CIR (NRG). A similar configuration is the Phase One 100MP 4-Band aerial system with two 100MP iXM-RS100F cameras. Phase One also offers an advanced large-format 190MP 4-Band system with two RGB cameras and one NIR camera to allow faster and more efficient execution of aerial imaging projects.

2.2 Consumer-grade cameras

With advances in imaging sensor technology, consumer-grade cameras have become an attractive tool for airborne remote sensing. Compared with industrial cameras, such cameras are inexpensive, easy to use, and do not need to be connected to a PC for data acquisition. Consumer-grade cameras contain either a CCD or CMOS sensor that is fitted with a Bayer color filter to obtain true-color RGB images using one single sensor[39,40]. A Bayer filter mosaic is a color filter array for arranging RGB color filters on the sensor’s pixel array. The Bayer filter pattern assigns 50% of the pixels to green, 25% to red and 25% to blue. Each pixel is filtered to record only one of the three primary colors and various demosaicing algorithms are used to interpolate the red, green, and blue values for each pixel. Although pixel interpolation lowers the effective spatial resolution of each band image, the three band images are perfectly aligned. Consumer-grade digital cameras have been increasingly used by researchers on both manned[41,42] and unmanned[43,44] aircraft for agricultural applications.
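The Bayer sampling and interpolation described above can be illustrated with a small Python sketch. It assumes an RGGB layout (actual layouts vary by camera) and uses plain neighborhood averaging, which is only one of many demosaicing approaches and not the algorithm of any particular camera; all function names are introduced here for illustration.

```python
from collections import Counter


def bayer_color(row, col):
    """Filter color at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def color_fractions(rows, cols):
    """Fraction of sensor pixels assigned to each color."""
    counts = Counter(bayer_color(r, c) for r in range(rows) for c in range(cols))
    return {color: counts[color] / (rows * cols) for color in "RGB"}


def demosaic_pixel(raw, row, col):
    """Estimate (R, G, B) at one pixel of a raw mosaic (nested lists).

    The pixel keeps its own measured color; the two missing colors are
    the mean of the same-color samples in the surrounding 3x3 window.
    """
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(max(row - 1, 0), min(row + 2, len(raw))):
        for c in range(max(col - 1, 0), min(col + 2, len(raw[0]))):
            color = bayer_color(r, c)
            sums[color] += raw[r][c]
            counts[color] += 1
    estimate = {k: sums[k] / counts[k] for k in "RGB"}
    estimate[bayer_color(row, col)] = float(raw[row][col])
    return (estimate["R"], estimate["G"], estimate["B"])


# Hypothetical 2x2 raw tile: R=10, G=20 (top row); G=30, B=40 (bottom row).
raw_tile = [[10, 20], [30, 40]]
rgb_at_red_pixel = demosaic_pixel(raw_tile, 0, 0)
```

Because every output pixel is interpolated from the same sensor grid, the three band images share identical geometry, which is why no band-to-band registration is needed.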

RGB imagery from a consumer-grade camera alone is useful for many agricultural applications. Many vegetation indices (VIs) derived from RGB images have been used for crop assessment[11,21]. Nevertheless, most VIs, including the most commonly used normalized difference vegetation index (NDVI), require spectral information in a NIR band. Since most consumer-grade cameras are fitted with filters to block UV and infrared light, it is possible to replace the blocking filter with a long-pass NIR filter in front of the CCD or CMOS sensor to obtain NIR images.
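NDVI is computed from the NIR and red bands as (NIR - red) / (NIR + red). A minimal Python sketch follows, assuming two co-registered bands supplied as nested lists; the function names and the sample values are hypothetical, and returning 0 where both bands are zero is a convention chosen here rather than part of the index definition.

```python
def ndvi(nir, red):
    """NDVI for one pixel: (NIR - red) / (NIR + red)."""
    total = nir + red
    if total == 0:
        return 0.0  # convention chosen here to avoid division by zero
    return (nir - red) / total


def ndvi_image(nir_band, red_band):
    """Apply ndvi() pixel by pixel to two co-registered bands."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical 2x2 band values: high NIR and low red in the top row,
# similar NIR and red in the bottom row.
nir_band = [[0.50, 0.45], [0.30, 0.25]]
red_band = [[0.05, 0.08], [0.20, 0.25]]
ndvi_map = ndvi_image(nir_band, red_band)
```

Values approach 1 where NIR greatly exceeds red, as over dense green vegetation, and fall toward 0 where the two bands are similar.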

Several companies offer infrared camera conversion services, and Life Pixel Infrared (Mukilteo, Washington, USA) and LDP LLC (Carlstadt, New Jersey, USA) are two of them. The NIR blocking filter is typically replaced by a 720nm or an 830nm long-pass filter. Since the Bayer filter mosaic is fused to the sensor substrate, the transmission profiles of the three Bayer RGB channels remain the same with the replaced long-pass NIR filter. However, all three channels in the converted camera only record NIR radiation. Although any of the three channels can be used as the NIR channel, the red channel typically has higher light intensity than the other two channels. Studies on the use of NIR-converted digital cameras have shown that such cameras combined with standard RGB cameras can be used as simple and affordable tools for plant stress detection and crop monitoring[41,42].

Inexpensive and user-friendly imaging systems capable of capturing images at varying altitudes with manned and unmanned aircraft are needed for both research and practical applications. In the last few years, several single- and dual-camera imaging systems based on consumer-grade cameras have been developed for use on any traditional aircraft or agricultural aircraft at the USDA-ARS Southern Plains Agricultural Research Center in College Station, Texas[42-44]. Agricultural aircraft are widely used in the U.S. to apply crop production and protection materials. There are thousands of such aircraft in the U.S. and they are readily available as a versatile platform for airborne remote sensing. If equipped with appropriate imaging systems, these aircraft can be used to acquire airborne imagery for monitoring crop growing conditions, detecting crop pests (i.e., weeds, diseases and insect damage), and assessing the performance and efficacy of aerial application treatments. This additional imaging capability will increase the usefulness of these aircraft and help aerial applicators generate additional revenues from remote sensing services[45].

The original USDA-ARS single-camera imaging system consisted of a Nikon D90 digital CMOS camera with a Nikon AF Nikkor 24mm f/2.8D lens (Nikon Inc., Melville, New York, USA) to capture RGB images with 4288×2848 pixels, a Nikon GP-1A GPS receiver (Nikon Inc., Melville, New York, USA) to geotag the image, and a Vello FreeWave wireless remote shutter release (Gradus Group LLC, New York, New York, USA) to trigger the camera. The fixed focal length (24mm) was selected to be similar to the width of the camera’s sensor area (23.6mm×15.8mm) so that the image width in ground distance was about the same as the flight height and the image height was about 2/3 of the flight height. For example, when the image is acquired at 1000m AGL, the image will cover approximately a ground area of 1000m×667m. As the Nikon D90 camera was discontinued, it has been replaced with the Nikon D7100, which has the same sensor area, but an array of 6000×4000 pixels. A new hähnel Captur Timer Kit (hähnel Industries Ltd., Bandon, Co. Cork, Ireland) is used to remotely trigger the camera at specified time intervals between consecutive shots.
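The rule of thumb relating focal length to ground coverage follows from similar triangles, assuming a nadir-looking camera over flat terrain. The symbols below are introduced here for illustration: $H$ is the flight height above ground, $f$ the focal length, $w_s$ and $h_s$ the sensor width and height, and $W$ and $L$ the ground width and height of the image.

```latex
W = H \cdot \frac{w_s}{f}, \qquad L = H \cdot \frac{h_s}{f}

% Single-camera system at H = 1000 m, f = 24 mm, sensor 23.6 mm x 15.8 mm:
W = 1000\,\mathrm{m} \times \frac{23.6}{24} \approx 983\,\mathrm{m}, \qquad
L = 1000\,\mathrm{m} \times \frac{15.8}{24} \approx 658\,\mathrm{m}
```

These values are consistent with the approximate 1000m×667m coverage quoted above, which treats the focal length as equal to the sensor width.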

The dual-camera system was assembled by adding a NIR-modified Nikon D7100 camera. To trigger the two cameras simultaneously, one hähnel Captur remote receiver was attached to each camera and one single hähnel Captur remote transmitter was used to start/stop image acquisition. One Nikon GP-1A GPS receiver is attached to each camera for geotagging. The two cameras can be attached via a camera mount to an aircraft with minimal or no modification to the aircraft. Both the single- and dual-camera systems have been attached to an Air Tractor 402B (Fig. 2) and several fixed-wing aircraft (i.e., Cessna 206, 182, and 172). These systems have been evaluated for crop pest detection and crop type identification[41,44].

Fig. 2 A two-camera imaging system developed at USDA-ARS Aerial Application Technology Research Unit in College Station, Texas, is attached to the left step of an Air Tractor 402B via a camera mount (yellow box)

Two other systems with a large sensor size were also assembled at USDA-ARS, College Station, Texas. One system consisted of two Canon EOS 5D Mark III digital cameras with a 5760×3840 pixel array (Canon USA Inc., Lake Success, New York, USA) as shown in Fig. 1. The other consisted of two Nikon D810 digital cameras with a 7360×4912 pixel array. These two imaging systems have the same sensor size (36mm×24mm) and focal length (20mm). At a flight height of 1000m AGL, the image covers a ground area of 1800m×1200m with a pixel size of 31cm for the Canon system and 24cm for the Nikon system. The cost for each of the two-camera systems was about $6,500. The two-camera systems have also been evaluated for precision agricultural applications[41,44,46,47].

As the demand for modified cameras increases, some companies offer modified consumer-grade cameras to capture blue-green-NIR or green-red-NIR (i.e., CIR) imagery with one single camera. For example, Life Pixel Infrared uses so-called NDVI filters with high in-band transmission to convert RGB cameras to capture blue, green and NIR light. LDP LLC provides modification services to convert RGB cameras to either blue-green-NIR 680-800nm or green-red-NIR 800-900nm (CIR) cameras. Furthermore, the two types of modified cameras can be tied together to capture five-band images simultaneously. Compared with modified NIR cameras that are not affected by visible light, the modified blue-green-NIR or green-red-NIR cameras could have the light contamination issue, depending on the filters and algorithms used to achieve band separation[48]. Although modified cameras have their advantages and potential for remote sensing applications, more research is needed to improve modification methods and to evaluate the performance of modified cameras.

3 Airborne hyperspectral imaging sensors

Hyperspectral imaging sensors, also known as imaging spectrometers, are a new generation of electro-optical sensors that can collect image data in tens to hundreds of very narrow, contiguous spectral bands across the visible to thermal infrared region of the spectrum. Many airborne hyperspectral sensors (AVIRIS, CASI, HYDICE, HyMap, AISA, HySpex) have been developed and used for various remote sensing applications since the late 1980s. In this section, several commonly used airborne hyperspectral imaging systems are discussed.

The Airborne Visible/Infrared Imaging Spectrometer (AVIRIS)[42], developed by the Jet Propulsion Laboratory (JPL) in Pasadena, California, was the first hyperspectral imaging sensor that delivered 12-bit images in 224 spectral bands in the 400 to 2500nm spectral range. The AVIRIS instrument contains four detectors to cover the spectral ranges of 400-700nm, 700-1300nm, 1300-1900nm, and 1900-2500nm, respectively. When flown at 20km MSL at 730km/h, AVIRIS collects imagery with a pixel size of 20m and a ground swath of 11km. When imagery is collected at 4km MSL at 130km/h, it has a pixel size of 4m and a swath of 2km wide. Green et al.[49] provided an excellent overview of AVIRIS and examples of scientific research and applications on the use of the sensor. AVIRIS has been upgraded and improved in a continuous effort to meet the requirements for research and applications.

HyMap is an airborne hyperspectral imaging sensor developed and manufactured by Integrated Spectronics Pty Ltd (Sydney, Australia). Since 1996, HyMap airborne hyperspectral scanners have been deployed in many countries in North America, Europe, Africa and Australasia. The initial HyMap sensor had 96 channels in the 550-2500nm range and was designed primarily for mineral exploration[50]. The current HyMap sensor, operated by HyVista Corporation Pty Ltd (Sydney, Australia), provides 128 bands in the 450-2500nm spectral range. The system can be installed in any aircraft with a standard camera port. Differential GPS and an IMU are used for geolocation and image geocoding. The sensor can be configured to capture 512-pixel-wide images at 3-10m spatial resolution for different applications[46-48,51].

The Compact Airborne Spectrographic Imager (CASI), first introduced in 1989 by ITRES Research Ltd. (Calgary, Alberta, Canada), was one of the first programmable image spectrometers. It allows users to program the sensor to collect hyperspectral data in specific bands and bandwidths for different applications. Currently, ITRES offers a suite of airborne hyperspectral sensors covering the visible to thermal spectral range. The CASI-1500H captures 14-bit hyperspectral images at up to 288 bands in the 380-1050nm spectral range with 1500 across-track pixels. It features a compact design with an embedded controller and integrated GPS/IMU. The SASI-1000A offers 1000-pixel-wide images at 200 bands in 950-2450nm to allow seamless VNIR-SWIR coverage in conjunction with the CASI-1500H. The MASI-600, the first commercially available MWIR airborne hyperspectral sensor, provides 600-pixel wide images at 64 bands in the 3-5µm spectral range, while the TASI-600 captures hyperspectral thermal images with the same swath at 32 bands in the 8-11.5µm range. ITRES also offers smaller versions of the sensors for UAS.

SPECIM, Spectral Imaging Ltd (Oulu, Finland) provides a family of airborne hyperspectral imaging systems (AISA family of airborne hyperspectral sensors: AisaEAGLE, AisaEAGLET, AisaHAWK, AisaDUAL, AisaOWL), including AisaKESTREL 10 in 400-1000nm, AisaKESTREL 16 in 600-1640nm, AisaFENIX and AisaFENIX 1K in 380-2500nm, and AisaOWL in 7.7-12.3µm. The AisaFENIX sensor can capture images with a swath of 384 pixels at up to 348 VNIR bands and 274 SWIR bands, while the AisaFENIX 1K sensor can capture images with a swath of 1024 pixels at up to 348 VNIR bands and 256 SWIR bands. The AisaOWL can obtain thermal images with a 384-pixel swath at 96 bands. AisaKESTREL 10 and AisaKESTREL 16 can also be fitted to UAS to capture hyperspectral images with a swath of 2040 and 640 pixels, respectively. All the sensors are integrated with a GPS/INS unit[51].

Headwall Photonics, Inc. (Fitchburg, Massachusetts, USA) offers a suite of hyperspectral imaging sensors covering the spectral range of 250 to 2500nm. Separate sensors are available for UV to visible (250-500nm), VNIR (380-1000nm), NIR (900-1700nm), and SWIR (950-2500nm) spectral ranges. It also offers a co-registered VNIR-SWIR imaging sensor with 400 to 2500nm coverage. The hyperspectral imaging system at USDA-ARS in College Station, Texas consists of a Headwall HyperSpec VNIR E-Series imaging spectrometer (upper left of Fig. 1), an integrated GPS/IMU, and a hyperspectral data processing unit. The spectrometer can capture 16-bit images with a 1600-pixel-wide swath at up to 923 bands in the 380 to 1000nm range. At 1000m AGL, the hyperspectral camera covers a swath of 615m with a pixel size of 38cm[52].

4 Airborne imagery application examples

Airborne multispectral imagery has been widely used in precision agriculture for assessing soil properties[52,53], mapping crop growth and yield variability[54-56], and detecting water stress[57], weed infestations[58], crop insect damage[59], and disease infections[30,53,60]. Moran et al.[27] reviewed the potential of image-based remote sensing to provide spatial and temporal data for precision agriculture applications. More recently, Mulla[61] reviewed the key advances of remote sensing in precision agriculture and identified the knowledge gaps. Airborne hyperspectral imagery has been evaluated for assessing soil fertility, estimating crop biophysical parameters[62], mapping crop yield variability[63,64], and detecting crop pests and diseases[65-68]. In this section, five application examples based on the work performed by the author and his collaborators are provided to illustrate how multispectral and hyperspectral imagery has been used for crop growth assessment and crop disease management for practical precision agriculture applications.

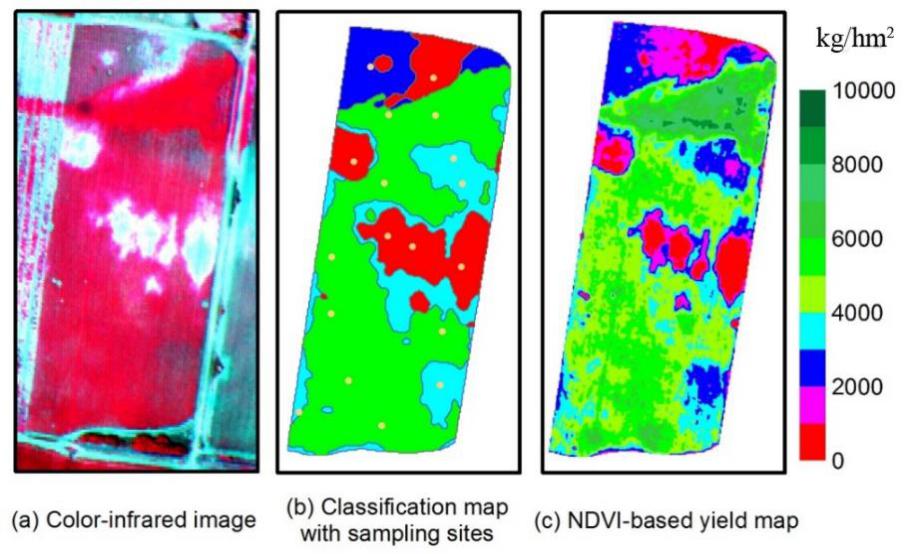

4.1 Color-infrared imagery for creating management zones and yield maps

Identification of management zones is an important first step for site-specific applications of farming inputs (i.e., nutrients, water, and pesticides) in precision agriculture. In addition to soil sampling and yield mapping, remote sensing provides an alternative for establishing management zones within fields. Yang and Anderson[69] demonstrated how to use airborne multispectral imagery to delineate within-field management zones and to map yield variability. They employed an airborne three-camera imaging system described by Everitt et al.[31] for image acquisition. The system consisted of three CCD cameras fitted with a green filter (555-565nm), a red filter (625-635nm) and a NIR filter (845-857nm), respectively. The green, red and NIR signals from the three cameras were digitized and combined to produce 8-bit CIR composite images with 640×480 pixels.

Fig. 3(a) shows a CIR image for a 6hm2 grain sorghum field in south Texas. The image was acquired at an altitude of 1300m with a pixel size of 1.4m. On the CIR image, healthy plants have a reddish color, while chlorotic plants and areas with large soil exposure have a whitish and grayish tone. The CIR image was classified into four spectral zones using an unsupervised classification technique (Fig. 3(b)). The blue zone represented water-stressed areas due to the high ground elevation. The red zone depicted areas where plants suffered severe chlorosis due to iron deficiency. The other two zones (cyan and green) represented areas with growth variability attributed to a combination of soil and environmental factors. The advantage of classifying image data into discrete zones is the reduced variance within each zone. These zones can be used as management zones for guided plant and soil sampling and for other precision farming operations. A stratified random sampling approach was used to generate a limited number of sampling sites to identify the cause of the variation for the field (Fig. 3(b)).
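The zoning and sampling steps described above can be sketched in a few lines. The example below is only an illustration under stated assumptions: the text does not name the unsupervised classifier that was used, so plain k-means is substituted here, the image is synthetic random data, and the function names `kmeans_zones` and `stratified_sites` are hypothetical.

```python
import numpy as np


def kmeans_zones(image, n_zones=4, n_iter=20, seed=0):
    """Cluster the pixels of a (rows, cols, bands) image into spectral zones.

    Plain k-means is an assumption made for this sketch; the source only
    says an unsupervised classification technique was used.
    """
    rows, cols, bands = image.shape
    pixels = image.reshape(-1, bands).astype(float)
    rng = np.random.default_rng(seed)
    # Use randomly chosen pixels as the initial cluster centres.
    centres = pixels[rng.choice(len(pixels), n_zones, replace=False)]
    for _ in range(n_iter):
        # Assign every pixel to its nearest centre (Euclidean distance).
        dist = np.linalg.norm(pixels[:, None, :] - centres[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Move each centre to the mean of its pixels; skip empty clusters.
        for k in range(n_zones):
            members = labels == k
            if np.any(members):
                centres[k] = pixels[members].mean(axis=0)
    return labels.reshape(rows, cols)


def stratified_sites(zones, per_zone=3, seed=0):
    """Pick random (row, col) sampling sites separately within each zone."""
    rng = np.random.default_rng(seed)
    sites = {}
    for k in np.unique(zones):
        r, c = np.nonzero(zones == k)
        pick = rng.choice(len(r), size=min(per_zone, len(r)), replace=False)
        sites[int(k)] = list(zip(r[pick].tolist(), c[pick].tolist()))
    return sites


# Synthetic 8-bit, three-band image with the 640 x 480 size given in the text.
cir = np.random.default_rng(1).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
zones = kmeans_zones(cir)
sites = stratified_sites(zones, per_zone=3)
```

Drawing sites within each zone, rather than across the whole field, is what keeps the number of ground samples small while still covering every growth condition identified in the image.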

Correlation analysis showed that grain yield was significantly related to NDVI (r=0.95). The implication of this strong correlation is that a grain yield map could be generated from the image based on the regression equation between yield and NDVI (Fig. 3(c)). Compared with the CIR image and classification map, the yield map has a similar spatial pattern, but reveals more variations within the zones. This example illustrates how airborne multispectral imagery can be used in conjunction with GPS, geographic information systems (GIS), ground sampling and image processing techniques for identifying management zones and mapping within-field yield variability. Unsupervised classification is effective for classifying the image into spectral zones with different growth conditions and production levels. Multispectral imagery has also proven to be instrumental in modeling yield spatial variability.
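The step from sampled yield and NDVI values to a yield map can be sketched as follows. This is a minimal illustration that assumes a simple linear regression between yield and NDVI; the sample values are synthetic, and the function names `ndvi` and `fit_yield_model` are hypothetical rather than taken from the cited study.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized difference vegetation index, (NIR - red) / (NIR + red)."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    total = nir + red
    out = np.zeros_like(total)
    # Leave NDVI at 0 where both bands are 0 to avoid dividing by zero.
    np.divide(nir - red, total, out=out, where=total != 0)
    return out


def fit_yield_model(ndvi_samples, yield_samples):
    """Fit yield = slope * NDVI + intercept and return (slope, intercept, r)."""
    slope, intercept = np.polyfit(ndvi_samples, yield_samples, 1)
    r = np.corrcoef(ndvi_samples, yield_samples)[0, 1]
    return slope, intercept, r


# Synthetic sampling-site data (illustrative values only).
site_ndvi = np.array([0.15, 0.25, 0.35, 0.45, 0.55, 0.65])
site_yield = 2.0 + 5.0 * site_ndvi
slope, intercept, r = fit_yield_model(site_ndvi, site_yield)

# Apply the fitted equation to every pixel to obtain a yield map.
nir_band = np.array([[200, 180], [120, 0]])
red_band = np.array([[50, 60], [100, 0]])
yield_map = slope * ndvi(nir_band, red_band) + intercept
```

Because the regression is fitted at a limited number of sampling sites and then applied to every pixel, the resulting map shows continuous variation within zones that a discrete classification map cannot.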

Fig. 3 Airborne image and maps for a 6hm2 grain sorghum field in south Texas (Adapted from [69])

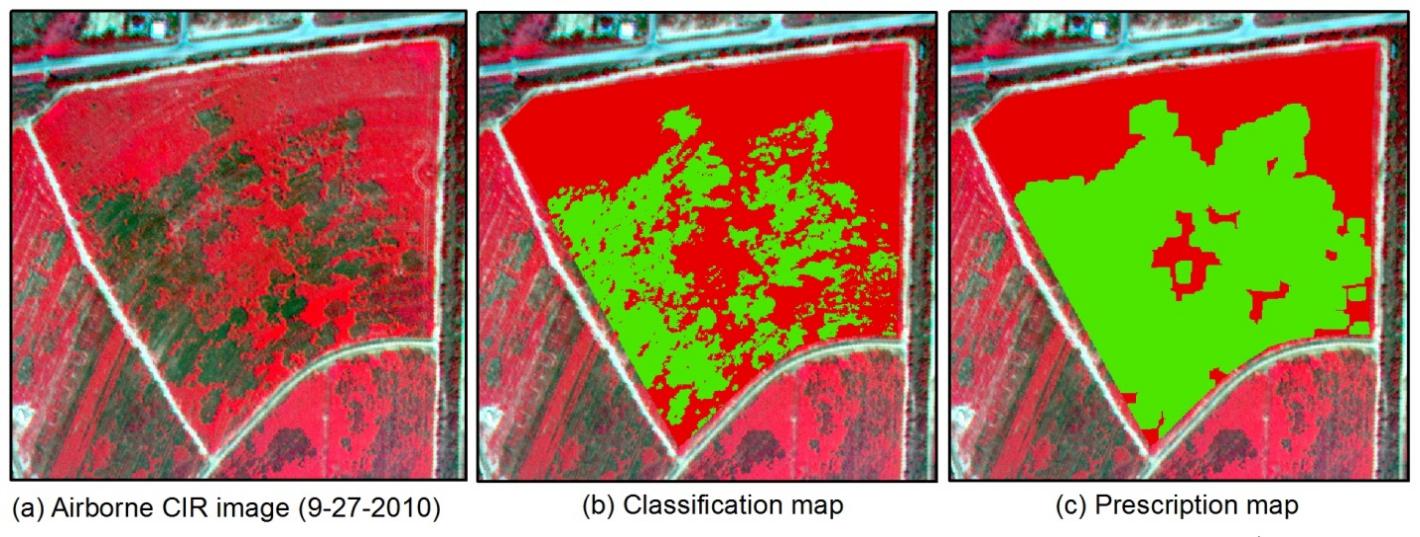

4.2 Four-band imagery for mapping cotton root rot

Cotton root rot, caused by the soilborne fungus Phymatotrichopsis omnivora, is a destructive disease that has affected the cotton industry for over a century. Historical airborne images taken from infested fields have demonstrated that this disease tends to occur in the same general areas within fields year after year[30]. The unique spatial nature makes cotton root rot an excellent candidate for site-specific management.

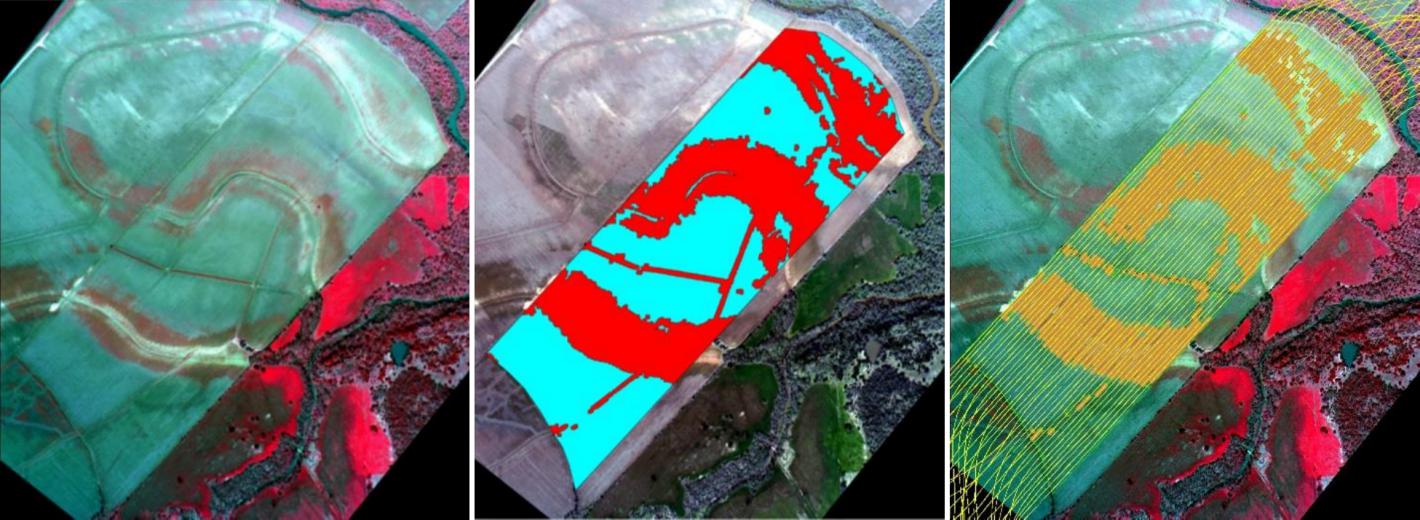

Fig. 4(a) shows a CIR image captured with the four-camera system shown in Fig. 1 from an 11hm2 cotton field near San Angelo, Texas. A corresponding two-zone classification map and a two-zone prescription map are shown in Fig. 4(b) and Fig. 4(c), respectively. Root rot-infected plants had a dark gray color on the CIR image, compared with non-infected plants that had a reddish tone. The two-zone classification showed that approximately 37% of the field was infested. A 5m buffer was added to the infested areas on the classification map, which increased the treatment area to 63% of the field in the prescription map (Fig. 4(c)). The buffer effectively eliminated the small non-infested areas between the infested areas, which not only accommodated the potential expansion of the disease, but also made site-specific application more practical with large farm machinery.
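Adding a buffer to a classification map amounts to growing the infested zone outward by a fixed ground distance. The sketch below shows one way to do this on a raster mask; it assumes a boolean infestation mask with square pixels, and the function name `buffer_mask` is hypothetical. The original study may have buffered the map in GIS software instead.

```python
import numpy as np


def buffer_mask(infested, buffer_m, pixel_m):
    """Grow a boolean infestation mask outward by buffer_m metres.

    Every pixel within the buffer radius of an infested pixel is marked
    for treatment (a binary dilation with a disk-shaped neighbourhood).
    """
    radius = int(round(buffer_m / pixel_m))
    rows, cols = infested.shape
    padded = np.pad(infested, radius, mode="constant", constant_values=False)
    out = np.zeros((rows, cols), dtype=bool)
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr * dr + dc * dc <= radius * radius:
                # OR in the mask shifted by (dr, dc).
                out |= padded[radius + dr: radius + dr + rows,
                              radius + dc: radius + dc + cols]
    return out


# Illustrative case: one infested 1 m pixel in a 21 x 21 pixel field.
mask = np.zeros((21, 21), dtype=bool)
mask[10, 10] = True
prescription = buffer_mask(mask, buffer_m=5.0, pixel_m=1.0)
treated_fraction = prescription.mean()
```

Small untreated gaps between nearby infested patches close automatically once their buffers overlap, which is why the buffered treatment area grows faster than the infested area itself.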

A variable rate control system was adapted to an existing tractor and planter system for fungicide application to the field at planting. The system applied the desired rate to the prescribed areas with an error of 1.5%. Post-treatment airborne imagery showed that the fungicide effectively controlled cotton root rot in the infested areas. Simple economic analysis indicated that the savings in fungicide use could offset the initial investment in the variable rate controller if the treatment area could be reduced by 40hm2. For some large fields, the reduction in treatment area in a single field can exceed that number. Therefore, there is great potential for savings with site-specific management of this disease.

Fig. 4 Airborne image and maps for an 11hm2 cotton field infested with cotton root rot near San Angelo, Texas. The green areas in the prescription map were treated with fungicide (Adapted from [70])

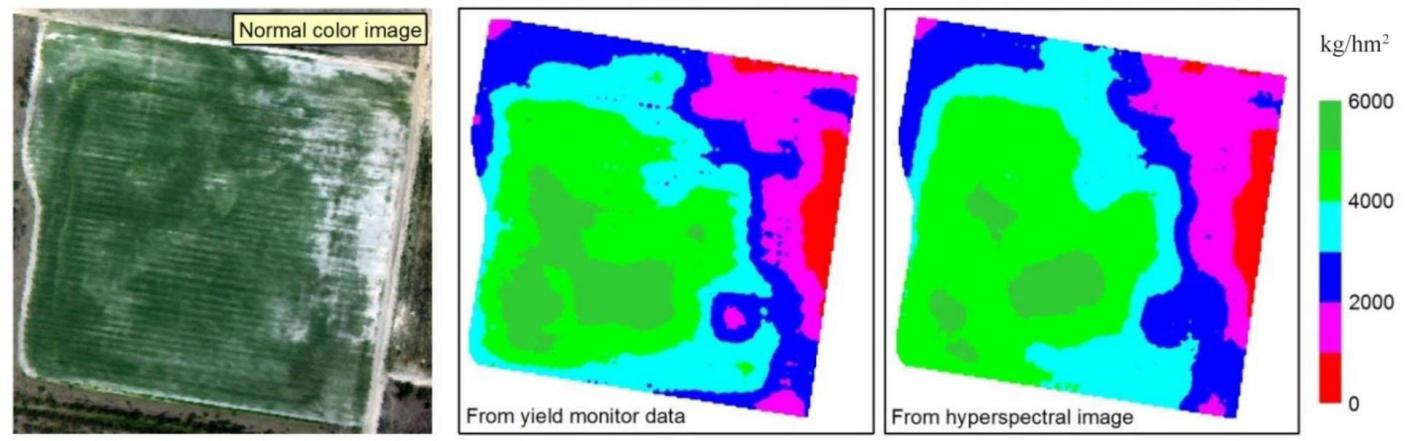

4.3 Hyperspectral imagery for mapping crop yield variability

Hyperspectral imagery has the potential to provide better estimation of biophysical parameters than multispectral imagery due to its fine spectral resolution. Yang et al.[71] evaluated airborne hyperspectral imagery for mapping crop yield variability as compared with yield monitor data. An airborne hyperspectral imaging system described by Yang et al.[72] was used for acquiring imagery from a 14hm2 grain sorghum field in south Texas. The system was configured to capture 12-bit images with a swath of 640 pixels at 128 bands in the 450 to 920nm spectral range. After the noisy bands in the lower and higher wavelengths were removed, the remaining 102 bands with wavelengths from 475 to 845nm were used for analysis. Yield data were collected from the field using a Yield Monitor 2000 system (Ag Leader Technology, Ames, Iowa, USA) mounted on the harvester.

Fig. 5 shows an RGB color composite from the hyperspectral image for the field. The image was taken around the peak plant development for the crop. The light grayish color in the northeast portion of the field was mainly due to the thin plant stand over very sandy soil. Correlation analysis showed that grain yield was significantly negatively related to the visible bands and positively related to the NIR bands with correlation coefficients ranging from -0.80 to 0.84. Principal component analysis was applied to the image to eliminate data redundancy. Stepwise regression analysis based on the first ten principal components showed that five of the ten principal components were significant and explained 80% of the variability in yield. To identify significant bands, stepwise regression was performed directly on the yield data and the 102-band hyperspectral image data. Seven bands (481, 543, 713, 731, 735, 771, and 818nm) were identified to be significant and explained about 82% of the variability in yield. Fig. 5 also shows the yield maps generated from yield monitor data and from the seven significant bands in the image. The spatial patterns on both yield maps are similar, indicating that grain yield can be estimated from airborne hyperspectral imagery acquired around peak growth during the growing season.
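The idea of reducing many correlated bands to a few principal components before relating them to yield can be sketched as follows. This is a simplified illustration, not the procedure of the cited study: it regresses yield on the first ten components without the stepwise selection described above, the 102-band spectra and yield values are synthetic, and the function names `pca_scores` and `r_squared` are hypothetical.

```python
import numpy as np


def pca_scores(spectra, n_components):
    """Project (pixels, bands) spectra onto their first principal components."""
    centred = spectra - spectra.mean(axis=0)
    # Rows of vt are the principal axes, ordered by explained variance.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:n_components].T


def r_squared(predictors, response):
    """Coefficient of determination of an ordinary least-squares fit."""
    design = np.column_stack([np.ones(len(predictors)), predictors])
    coef, *_ = np.linalg.lstsq(design, response, rcond=None)
    residual = response - design @ coef
    return 1.0 - residual.var() / response.var()


# Synthetic data: 500 pixels, 102 bands driven by three latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 3))
spectra = latent @ rng.normal(size=(3, 102)) + 0.05 * rng.normal(size=(500, 102))
grain_yield = latent @ np.array([1.0, -0.5, 0.8]) + 0.1 * rng.normal(size=500)

scores = pca_scores(spectra, n_components=10)
r2 = r_squared(scores, grain_yield)
```

Because neighbouring hyperspectral bands are highly correlated, a handful of components carries most of the information in the full set of bands, which is why a small number of components or bands can explain a large share of the yield variability.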

Fig. 5 RGB color image and yield maps generated from yield monitor data and based on seven significant bands in a 102-band hyperspectral image for a 14hm2 grain sorghum field (Adapted from [69])

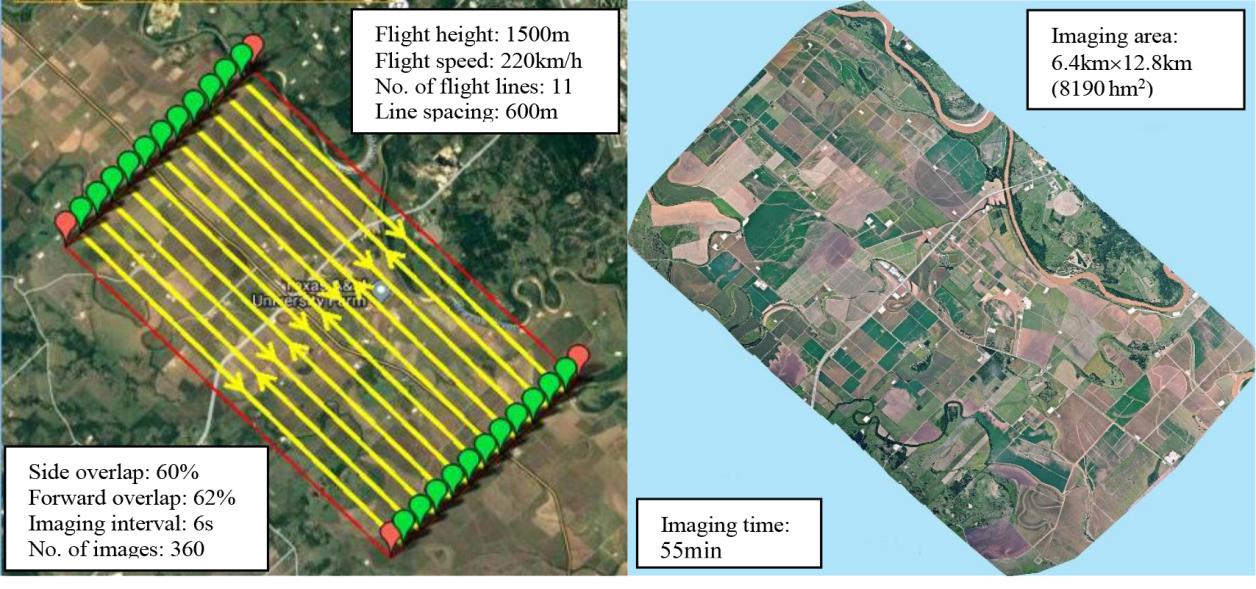

4.4 Crop monitoring with consumer-grade cameras

As discussed in Section 2.2, consumer-grade cameras can also be used to acquire RGB and NIR images like the industrial cameras in Examples 4.1 and 4.2. Depending on the camera and flight height, a single image can cover a portion of a field, the whole field, multiple fields or even a whole farm. For example, at 300m AGL, the Nikon D90 camera can cover a ground area of 300m×200m or 6hm2 with a pixel size of 8.6cm. At 3000m AGL, the same camera can cover an area of 3000m×2000m or 600hm2 with a pixel size of 86cm. Clearly, if flight height increases to 10 times the initial height, ground coverage increases to 100 times the initial coverage and pixel size increases to 10 times the initial value.

If a single image cannot cover the area of interest with the required pixel size, multiple images can be taken along multiple flight lines. For example, to map a 6.4km×12.8km (8190hm2) cropping area near College Station, Texas (red box in Fig. 6), a dual-camera system consisting of two Nikon D90 cameras was mounted on an Air Tractor 402B agricultural aircraft. The cameras were flown at 1500m AGL along 11 flight lines spaced 600m apart (yellow lines in Fig. 6). With a ground speed of 220km/h and an imaging interval of 6s, a total of 360 pairs of geotagged RGB and NIR images were acquired with a side overlap of 60% and a forward overlap of 62%. It took about 55 minutes to fly the whole imaging area. The images were then mosaicked using Pix4Dmapper software (Pix4D SA, Lausanne, Switzerland), and a pair of orthomosaic RGB and NIR images and a 3D digital surface model were created.
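The overlap figures in this flight plan follow directly from the camera geometry, line spacing, ground speed and imaging interval. The sketch below reproduces them approximately; it assumes that the long side of the sensor is oriented across the flight direction, that the terrain is flat, and that the flight lines run along the 12.8km side of the area. The function name `flight_plan` and its arguments are hypothetical.

```python
import math


def flight_plan(sensor_w_mm, sensor_h_mm, focal_mm, agl_m,
                line_spacing_m, speed_kmh, interval_s, area_width_m):
    """Return side overlap, forward overlap and number of flight lines.

    Assumes the sensor's long side is across-track and a nadir view
    over flat terrain.
    """
    swath_m = sensor_w_mm / focal_mm * agl_m   # across-track footprint
    along_m = sensor_h_mm / focal_mm * agl_m   # along-track footprint
    shot_spacing_m = speed_kmh / 3.6 * interval_s
    return {
        "side_overlap": 1.0 - line_spacing_m / swath_m,
        "forward_overlap": 1.0 - shot_spacing_m / along_m,
        "n_lines": math.ceil(area_width_m / line_spacing_m),
    }


# Values from the text: Nikon D90 (23.6 mm x 15.8 mm sensor, 24 mm lens),
# 1500 m AGL, 600 m line spacing, 220 km/h, 6 s interval, 6.4 km wide area.
plan = flight_plan(23.6, 15.8, 24.0, 1500.0, 600.0, 220.0, 6.0, 6400.0)
print(plan)
```

With the exact sensor dimensions, the side and forward overlaps come out at about 59% and 63%, close to the reported 60% and 62%, and the 6.4km width requires 11 lines at a 600m spacing.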

If the same dual-camera system is mounted on a UAS flying at the maximum flight height allowed by the FAA (400ft or 122m), each image will only cover a ground area of 122m×81.3m (1hm2) with a pixel size of 2.8cm. To image the 8190hm2 area with the same side and forward overlap as used for the manned aircraft, our AG-V6A hexacopter (HSE LLC, Denver, Colorado, USA), which allows a maximum ground speed of 10m/s, would need about 62 continuous hours to fly 144 lines, resulting in approximately 56,800 images. As the battery used for the UAS can only last about 15 minutes, multiple batteries are needed. With frequent battery changes and additional time for takeoff and landing, the actual flight time will be much longer, not to mention the weather factor. Moreover, it will take days, if not weeks, to mosaic the large number of images. Clearly, UAS-based imaging will be more appropriate if high-resolution imagery is needed over relatively small areas. Even with faster flight speed and longer battery endurance, it is still not practical to map large areas with the current FAA regulations.

Fig. 6 Flight lines (left) for taking images using a pair of RGB and NIR consumer-grade cameras and a mosaicked RGB image (right) for a cropping area near College Station, Texas.

The mosaicked RGB and NIR images from the manned aircraft were stacked as a multispectral image from which various VIs such as NDVI were derived for crop monitoring. As an example, Zhang et al.[17] used the mosaicked multispectral image to identify crop types and estimate crop leaf area index (LAI). Other uses of the image included within-field variability assessment for fertilizer and plant growth regulator applications for cotton, crop disease detection and other precision agriculture applications.

4.5 Multispectral imagery for site-specific aerial herbicide application

Variable rate or precision aerial application started receiving attention in the early 2000s when variable rate spray systems became available for agricultural aircraft[73,74]. In recent years, more and more aerial applicators feel that they need to adopt new technologies such as remote sensing and variable rate application in their operations to stay competitive. To address the need for practical methodologies for site-specific aerial applications of crop production and protection materials, Yang and Martin[58] demonstrated how to integrate an airborne multispectral imaging system and a variable rate aerial application system for site-specific management of the winter weed henbit. The imaging system consisting of two consumer-grade cameras was used to acquire RGB and NIR images from a fallow henbit-infested field near College Station, Texas. A prescription map was created from the images to only apply the glyphosate herbicide on infested areas.

Fig. 7 shows a CIR image with henbit infestations and a prescription map derived from the NDVI classification map with a 5m buffer added to the infested areas. The henbit-infested area with the buffer was estimated to be 56.4hm2 or 45.5% of the total area (124hm2). An Air IntelliStar variable rate system (Satloc, Hiawatha, Kansas) mounted on the Air Tractor AT-402B agricultural aircraft was used to apply glyphosate over the field based on the prescription map. The as-applied map and the imagery collected two weeks after the herbicide application were used to assess the performance of the site-specific application. Spatial and statistical analysis results showed that the imaging system was effective for mapping henbit infestations and for assessing the performance of site-specific herbicide application, and that the variable rate system accurately delivered the product at the desired rate to the prescribed areas for effective control of the weed. The methodology and results from this study are useful for aerial applicators to incorporate airborne imaging and variable rate application systems into their aerial application business to increase their capabilities and profits.

(a) airborne color-infrared image (b) prescription map (c) as-applied map

Fig. 7 Images for a 124hm2 fallow field infested with the henbit weed near College Station, Texas (Adapted from [68])

5 Challenges and future efforts

The availability of diverse remote sensing platforms and imaging systems today presents both tremendous opportunities and great challenges to remote sensing practitioners and users. Although this review focuses on airborne imaging systems based on manned aircraft, the other two major types of platforms (i.e., satellites and UAS) are also discussed and compared with manned aircraft. There are many challenges facing the use of remote sensing for precision agriculture. Some of the challenges include platform selection, type of images to collect, image acquisition and delivery, image conversion to final products, and practical use of the image products.

To select an appropriate platform and/or imaging system, various factors (e.g., the size of the area, image type and resolution requirements, and time and cost constraints) need to be considered for a particular application. In general, UAS will be more appropriate if very high-resolution imagery is needed for a small area. For imaging over large fields or at a farm level, manned aircraft will be more effective. However, if manned aircraft are not available, satellite imagery will be a good choice as it can cover a large area with relatively fine spatial resolution. Although farmers are aware of the availability of airborne and satellite imagery, most of them are not clear about which type of imagery to select and how to order it. Therefore, image providers and vendors need to provide better education to help customers select and order their image products.

The common types of imagery include multispectral (RGB, NIR, and red edge), hyperspectral, and thermal imagery. For most precision agriculture applications, RGB and NIR imagery is necessary, though RGB imagery alone may be sufficient for some applications. Hyperspectral imagery is currently provided by manned aircraft and UAS as few satellite hyperspectral sensors are available. Hyperspectral and thermal imagery is only needed when RGB and NIR imagery cannot meet the requirement. When to collect imagery is another issue. Depending on the purposes (e.g., nutrient assessment, water status, pest detection, and yield estimation), optimum image acquisition dates differ. More research is needed to identify the optimum periods for different applications.

Timely acquisition and delivery of imagery are very important. Although most current satellites have a revisit period of 1-5 days, the actual image acquisition time depends on local weather conditions and the competition with other customers in the same geographic area. Since most high-resolution satellites cover a relatively narrow swath, these sensors can be tilted at an angle to take off-nadir images over tasked areas along the path. As more satellite sensors are launched, customers can order imagery from multiple satellite sensors owned by a company to increase the chances for timely imagery. The complete PlanetScope constellation will eventually be able to image the entire Earth every day.

How to convert imagery into useable maps and information is one of the biggest challenges. Although numerous image processing techniques are available to convert imagery into classification maps and vegetation index maps, it is not always easy to convert these maps to prescription maps for variable rate application. The same NDVI map may show differences in soil, yield and pest infestations. Image processing software has different capabilities, complexities, and prices. Most growers may not have the skills and time to process image data. Image processing is a specialized field and requires advanced computer skills and a basic understanding of the techniques involved. If this is not practical for some growers, they can always use a commercial image processing service for creating relevant maps. Many agricultural dealerships are providing services for image acquisition, prescription map creation and variable rate application for precision agriculture.

Disclaimer

Mention of trade names or commercial products in this chapter is solely for the purpose of providing specific information and does not imply recommendation or endorsement by the U.S. Department of Agriculture. USDA is an equal opportunity provider and employer.

References

[1] Campbell J B. Introduction to Remote Sensing (3rd ed)[M]. New York: The Guilford Press, 2002.

[2] Chen X, Ma J, Qiao H, et al. Detecting infestation of take-all disease in wheat using Landsat Thematic Mapper imagery[J]. International Journal of Remote Sensing, 2007, 28(22): 5183-5189.

[3] Satellite Imaging Corporation[EB/OL]. [2020-1-11]. https://www.satimagingcorp.com/satellite-sensors/.

[4] Planet Labs Inc[EB/OL]. [2020-1-11]. https://www.planet.com/products/planet-imagery/.

[5] Yang C. High resolution satellite imaging sensors for precision agriculture[J]. Frontiers of Agricultural Science and Engineering, 2018, 5(4): 393-405.

[6] Wikipedia[EB/OL]. [2020-1-11]. https://en.wikipedia.org/wiki/Light-sport_aircraft.

[7] Federal Aviation Administration. Light sport aircraft[EB/OL]. [2019-9-6]. https://www.faa.gov/aircraft/gen_av/light_sport/.

[8] Zhao B, Zhang J, Yang C, et al. Rapeseed seedling stand counting and seeding performance evaluation at two early growth stages based on unmanned aerial vehicle imagery[J]. Frontiers in Plant Science, 2018, 9: 1362.

[9] Holman F H, Riche A B, Michalski A, et al. High throughput field phenotyping of wheat plant height and growth rate in field plot trials using UAV based remote sensing[J]. Remote Sensing, 2016, 8(12): 1031.

[10] Varela S, Assefa Y, Prasad P V V, et al. Spatio-temporal evaluation of plant height in corn via unmanned aerial systems[J]. Journal of Applied Remote Sensing, 2017, 11(3): 36013.

[11] Roth L, Aasen H, Walter A, et al. Extracting leaf area index using viewing geometry effects - A new perspective on high-resolution unmanned aerial system photography[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2018, 141: 161-175.

[12] Bendig J, Yu K, Aasen H, et al. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley[J]. International Journal of Applied Earth Observation and Geoinformation, 2015, 39: 79-87.

[13] Yue J, Yang G, Li C, et al. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models[J]. Remote Sensing, 2017, 9(7): 708.

[14] Kefauver S C, Vicente R, Vergara-Díaz O, et al. Comparative UAV and field phenotyping to assess yield and nitrogen use efficiency in hybrid and conventional barley[J]. Frontiers in Plant Science, 2017, 8: 1733.

[15] Gong Y, Duan B, Fang S, et al. Remote estimation of rapeseed yield with unmanned aerial vehicle (UAV) imaging and spectral mixture analysis[J]. Plant Methods, 2018, 14: 70.

[16] Hassan M A, Yang M, Rasheed A, et al. A rapid monitoring of NDVI across the wheat growth cycle for grain yield prediction using a multispectral UAV platform[J]. Plant Science, 2019, 282: 95-103.

[17] Zhang L, Zhang H, Niu Y, et al. Mapping maize water stress based on UAV multispectral remote sensing[J]. Remote Sensing, 2019, 11: 605.

[18] Torres-Sánchez J, López-Granados F, De Castro A I, et al. Configuration and specifications of an unmanned aerial vehicle (UAV) for early site-specific weed management[J]. PLoS ONE, 2013, 8(3): e58210.

[19] Jiménez-Brenes F M, López-Granados F, Torres-Sánchez J, et al. Automatic UAV-based detection of Cynodon dactylon for site-specific vineyard management[J]. PLoS ONE, 2019, 14(6): e0218132.

[20] Cao F, Liu F, Guo H, et al. Fast detection of Sclerotinia sclerotiorum on oilseed rape leaves using low-altitude remote sensing technology[J]. Sensors, 2018, 18(12): 4464.

[21] Heim R H J, Wright I J, Scarth P, et al. Multispectral, aerial disease detection for myrtle rust (Austropuccinia psidii) on a lemon myrtle plantation[J]. Drones, 2019, 3: 25.

[22] Caturegli L, Corniglia M, Gaetani M, et al. Unmanned aerial vehicle to estimate nitrogen status of turfgrasses[J]. PLoS ONE, 2016, 11(6): e0158268.

[23] Vanegas F, Bratanov D, Powell K, et al. A novel methodology for improving plant pest surveillance in vineyards and crops using UAV-based hyperspectral and spatial data[J]. Sensors, 2018, 18: 260.

[24] Federal Aviation Administration. Certificated remote pilots including commercial operators[EB/OL]. [2019-9-4]. https://www.faa.gov/uas/commercial_operators/.

[25] Mausel P W, Everitt J H, Escobar D E, et al. Airborne videography: current status and future perspectives[J]. Photogrammetric Engineering & Remote Sensing, 1992, 58(8): 1189-1195.

[26] King D J. Airborne multispectral digital camera and video sensors: a critical review of systems designs and applications[J]. Canadian Journal of Remote Sensing, 1995, 21(3): 245-273.

[27] Moran M S, Inoue Y, Barnes E M. Opportunities and limitations for image-based remote sensing in precision crop management[J]. Remote Sensing of Environment, 1997, 61(3): 319-346.

[28] Moran M S, Fitzgerald G, Rango A, et al. Sensor development and radiometric correction for agricultural applications[J]. Photogrammetric Engineering & Remote Sensing, 2003, 69(6): 705-718.

[29] PinterJr P J, Hatfield J L, Schepers J S, et al. Remote sensing for crop management[J]. Photogrammetric Engineering & Remote Sensing, 2003, 69(6): 647-664.

[30] Yang C, Odvody G N, Thomasson J A, et al. Change detection of cotton root rot infection over 10-year intervals using airborne multispectral imagery[J]. Computers and Electronics in Agriculture, 2016, 123: 154-162.

[31] Everitt J H, Escobar D E, Cavazos I, et al. A three-camera multispectral digital video imaging system[J]. Remote Sensing of Environment, 1995, 54(3): 333-337.

[32] Escobar D E, Everitt J H, Noriega J R, et al. A twelve-band airborne digital video imaging system (ADVIS)[J]. Remote Sensing of Environment, 1998, 66(2): 122-128.

[33] Gorsevski P V, Gessler P E. The design and the development of a hyperspectral and multispectral airborne mapping system[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2009, 64(2): 184-192.

[34] Yang C. A high-resolution airborne four-camera imaging system for agricultural remote sensing[J]. Computers and Electronics in Agriculture, 2012, 88: 13-24.

[35] Airborne Data Systems, Inc[EB/OL]. [2020-1-11]. http://www.airbornedatasystems.com/.

[36] Teledyne Optech[EB/OL]. [2020-1-11]. http://www.teledyneoptech.com/en/products/airborne-survey/.

[37] FLIR Systems, Inc[EB/OL]. [2020-1-11]. https://www.flir.com/browse/rampd-and-science/high-performance-cameras/.

[38] ITRES Research Limited[EB/OL]. [2020-1-11]. https://www.itres.com/.

[39] Bayer B E. Color Imaging Array: US Patent 3971065[P]. 1976.

[40] Hirakawa K, Wolfe P J. Spatio-spectral sampling and color filter array design[C]// In Single-Sensor Imaging: Methods and Applications for Digital Cameras, Lukac, R (ed), CRC Press, Boca Raton, Florida, USA, 2008.

[41] Song X, Yang C, Wu M, et al. Evaluation of Sentinel-2A imagery for mapping cotton root rot[J]. Remote Sensing, 2017, 9(9): 906.

[42] Airborne Visible InfraRed Imaging Spectrometer (AVIRIS) [EB/OL]. [2020-1-11]. https://aviris.jpl.nasa.gov/.

[43] Yang C, Hoffmann W C. Converting aerial imagery to application maps[J]. Agricultural Aviation, 2016, 43(4): 72-74.

[44] Westbrook J K, Ritchie S E, Yang C, et al. Airborne multispectral identification of individual cotton plants using consumer-grade cameras[J]. Remote Sensing Applications: Society and Environment, 2016, 4: 37-43.

[45] Schiefer S, Hostert P, Damm A. Correcting brightness gradients in hyperspectral data from urban areas[J]. Remote Sensing of Environment, 2006, 101(1): 25-37.

[46] Dehaan R L, Taylor G R. Field-derived spectra of salinized soils and vegetation as indicators of irrigation-induced soil salinization[J]. Remote Sensing of Environment, 2002, 80(3): 406-417.

[47] Galvao L S, Ponzoni F J, Epiphanio J C N, et al. Sun and view angle effects on NDVI determination of land cover types in the Brazilian Amazon region with hyperspectral data[J]. International Journal of Remote Sensing, 2004, 25(10): 1861-1879.

[48] Cocks T, Jenssen R, Stewart A, et al. The HyMAP airborne hyperspectral sensor: The system, calibration and performance[C]// 1st EARSeL Workshop on Imaging Spectroscopy, Univ Zurich, Remote Sensing Lab, Zurich, Switzerland, 1998.

[49] Yang C, Hoffmann W C. Low-cost single-camera imaging system for aerial applicators[J]. Journal of Applied Remote Sensing, 2015, 9: no.096064.

[50] Green R O, Eastwood M L, Sarture C M, et al. Imaging spectroscopy and the airborne visible/infrared imaging spectrometer (AVIRIS)[J]. Remote Sensing of Environment, 1998, 65(3): 227-248.

[51] Spectral Imaging Ltd[EB/OL]. [2020-1-11]. https://www.specim.fi/afx/#1576656091402-52f5761e-8ca1.

[52] Headwall Photonics, Inc[EB/OL]. [2020-1-11]. https://www.headwallphotonics.com/fov-calculator.

[53] Varvel G E, Schlemmer M R, Schepers J S. Relationship between spectral data from an aerial image and soil organic matter and phosphorus levels[J]. Precision Agriculture, 1999, 1(3): 291-300.

[54] Yang C, Everitt J H. Relationships between yield monitor data and airborne multidate multispectral digital imagery for grain sorghum[J]. Precision Agriculture, 2002, 3(4): 373-388.

[55] Inman D, Khosla R, Reich R, et al. Normalized difference vegetation index and soil color-based management zones in irrigated maize[J]. Agronomy Journal, 2008, 100(1): 60-66.

[56] Sui R, Hartley B E, Gibson J M, et al. Yield estimate of biomass sorghum with aerial imagery[J]. Journal of Applied Remote Sensing, 2011, 5(1): no.053523.

[57] Cohen Y, Alchanatis V, Saranga Y, et al. Mapping water status based on aerial thermal imagery: comparison of methodologies for upscaling from a single leaf to commercial fields[J]. Precision Agriculture, 2017, 18(5): 801-822.

[58] Yang C, Martin D E. Integration of aerial imaging and variable rate technology for site-specific aerial herbicide application[J]. Transactions of the ASABE, 2017, 60(3): 635-644.

[59] Backoulou G F, Elliott N C, Giles K L, et al. Processed multispectral imagery differentiates wheat crop stress caused by greenbug from other causes[J]. Computers and Electronics in Agriculture, 2015, 115: 34-39.

[60] Yang C, Martin D E. Integration of aerial imaging and variable rate technology for site-specific aerial herbicide application[J]. Transactions of the ASABE, 2017, 60(3): 635-644.

[61] Mulla D J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps[J]. Biosystems Engineering, 2013, 114(4): 358-371.

[62] Goel P K, Prasher S O, Landry J A, et al. Estimation of crop biophysical parameters through airborne and field hyperspectral remote sensing[J]. Transactions of the ASAE, 2003, 46(4): 1235-1246.

[63] Zarco-Tejada P J, Ustin S L, et al. Temporal and spatial relationships between within-field yield variability in cotton and high-spatial hyperspectral remote sensing imagery[J]. Agronomy Journal, 2005, 97(3): 641-653.

[64] Yang C, Everitt J H, Bradford J M. Airborne hyperspectral imagery and linear spectral unmixing for mapping variation in crop yield[J]. Precision Agriculture, 2007, 8(6): 279-296.

[65] Fitzgerald G J, Maas S J, Detar W R. Spider mite detection in cotton using hyperspectral imagery and spectral mixture analysis[J]. Precision Agriculture, 2004, 5(3): 275-289.

[66] Huang W, Lamb D W, Niu Z, et al. Identification of yellow rust in wheat using in situ spectral reflectance measurements and airborne hyperspectral imaging[J]. Precision Agriculture, 2007, 8(4-5): 187-197.

[67] Li H, Lee W S, Wang K, et al. Extended spectral angle mapping (ESAM) for citrus greening disease detection using airborne hyperspectral imaging[J]. Precision Agriculture, 2014, 15(2): 162-183.

[68] MacDonald S L, Staid M, Staid M, et al. Remote hyperspectral imaging of grapevine leafroll-associated virus 3 in cabernet sauvignon vineyards[J]. Computers and Electronics in Agriculture, 2016, 130: 109-117.

[69] Yang C, Anderson G L. Airborne videography to identify spatial plant growth variability for grain sorghum[J]. Precision Agriculture, 1999, 1(1): 67-79.

[70] Yang C, Anderson G L. Mapping grain sorghum yield variability using airborne digital videography[J]. Precision Agriculture, 2000, 2(1): 7-23.

[71] Yang C, Everitt J H, Bradford J M. Airborne hyperspectral imagery and yield monitor data for estimating grain sorghum yield variability[J]. Transactions of the ASAE, 2004, 47(3): 915-924.

[72] Yang C, Everitt J H, Davis M R, et al. A CCD camera-based hyperspectral imaging system for stationary and airborne applications[J]. Geocarto International, 2003, 18(2): 71-80.

[73] Smith L A, Thomson S J. GPS position latency determination and ground speed calibration for the Satloc Airstar M3[J]. Applied Engineering in Agriculture, 2005, 21(5): 769-776.

[74] Thomson S J, Smith L A, Hanks J E. Evaluation of application accuracy and performance of a hydraulically operated variable-rate aerial application system[J]. Transactions of the ASABE, 2009, 52(3): 715-722.

Received date: 2019-09-24 Revised date: 2019-12-07

Biography: Chenghai Yang (1962-), Research Agricultural Engineer, research interests: remote sensing for precision agriculture and pest management, Tel: 1-979-260-9530, Email: chenghai.yang@usda.gov.

doi: 10.12133/j.smartag.2020.2.1.201909-SA004